Ever since the Open Science Collaboration published the results of its large-scale replication study in 2015 (Open Science Collaboration, 2015), bringing the replication crisis in psychology into sharp focus, it has been clear that experimental research has a problem. Through systematic p-hacking and HARKing (hypothesizing after the results are known), false-positive results can be obtained from almost any data set and then published in scientific journals (Simmons, Nelson, & Simonsohn, 2011). Especially in recent years, calls for transparent research and open science have grown louder and louder. Fortunately, doing research transparently takes little extra effort.

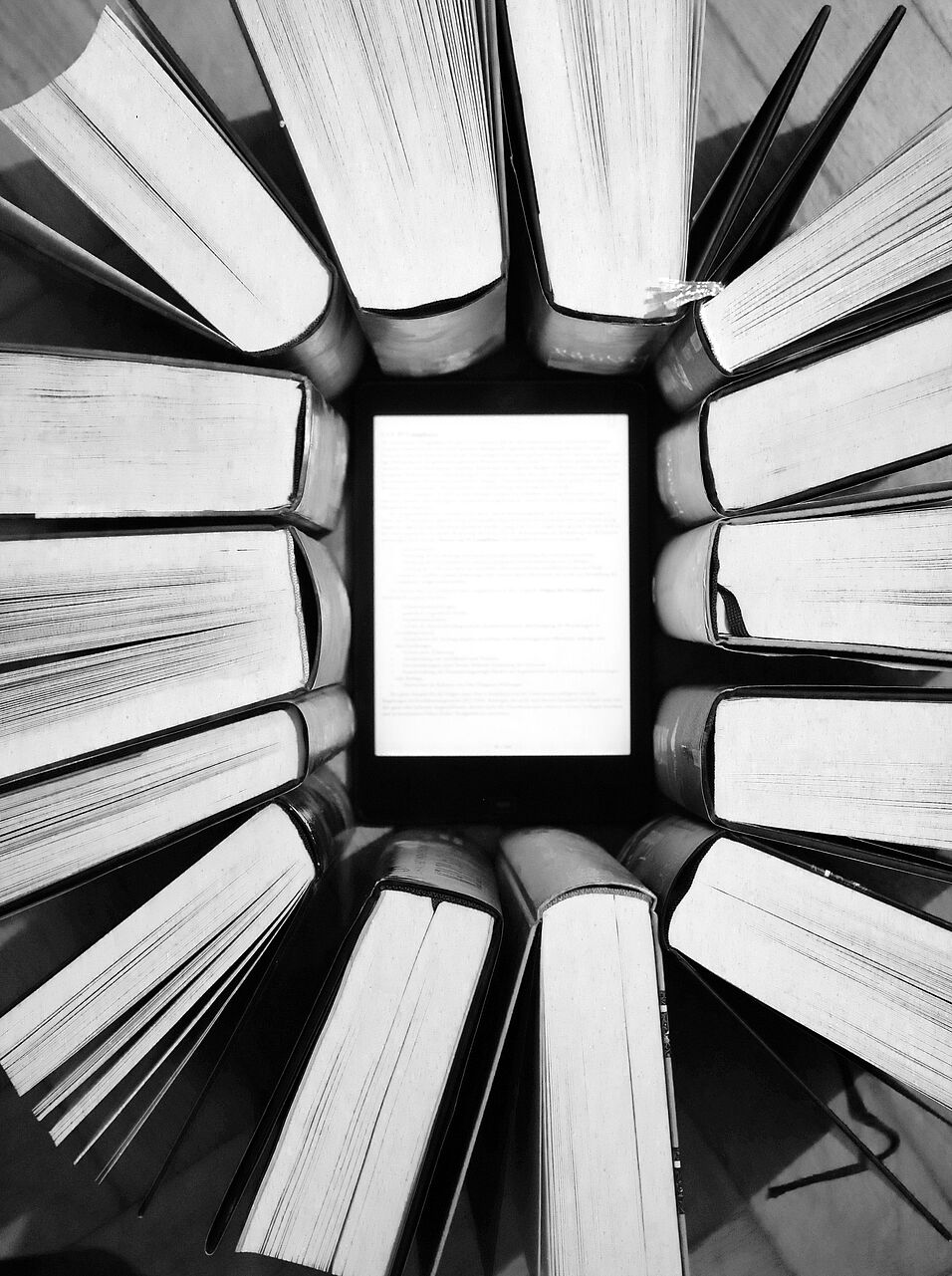

In the interdisciplinary Books on Screen project, Hajo Boomgaarden, Günther Stocker, Annika Schwabe, and Lukas Brandl investigate the consequences of reading literature digitally. Data collection for their first experiment is currently underway, and the study was pre-registered: the hypotheses, the data collection methods, and the data analysis methods were all defined and published before any data were collected (https://osf.io/vqkc4).

More than 200 journals now offer so-called Registered Reports. In this format, the study design is peer-reviewed before data collection, and if it is accepted, the journal commits to publishing the results regardless of whether they turn out to be significant (https://cos.io/rr/).

Since the researchers of the Books on Screen project were not yet sure which journal they wanted to publish in, they opted for journal-independent pre-registration on the Open Science Framework (OSF, https://osf.io/), which is easy to use and free of charge. Researchers simply upload a completed form and all relevant files, which then receive a time stamp; every subsequent change is tracked. Pre-registration does mean more work before data collection, but it adds little to the total effort, because the methods have to be worked out at some point in the research process anyway. Nor does it lock researchers in: the methods can still be changed after the original pre-registration has been uploaded, and additional analyses can still be run. Such deviations simply have to be reported in the final paper.

In addition, like the data sets of several earlier projects of the Computational Communication Science Lab, the data set will be published via AUSSDA, the Austrian Social Science Data Archive (https://aussda.at/en/).

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. *Science, 349*(6251), aac4716.

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. *Psychological Science, 22*(11), 1359–1366.